HPROF Binary Heap Dumps

Get Heap Dump on an OutOfMemoryError

One can get an HPROF binary heap dump on an OutOfMemoryError for Sun JVMs (1.4.2_12 or higher and 1.5.0_07 or higher), Oracle JVMs, OpenJDK JVMs, HP-UX JVMs (1.4.2_11 or higher) and SAP JVMs (since 1.5.0) by setting the following JVM parameter:

-XX:+HeapDumpOnOutOfMemoryError

By default, the heap dump is written to the working directory of the JVM. This can be changed with the -XX:HeapDumpPath option, which accepts either a directory or a file name, for example:

-XX:HeapDumpPath=/dumpPath/

-XX:HeapDumpPath=./java_pidPIDNNN.hprof
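On HotSpot-based JVMs (OpenJDK, Oracle), HeapDumpOnOutOfMemoryError and HeapDumpPath are manageable flags, so they can also be switched on in a process that is already running, through the com.sun.management.HotSpotDiagnosticMXBean. The class below is a minimal sketch under that assumption. The class name and the default path in main are hypothetical, and on other VMs setVMOption may reject these flags with an IllegalArgumentException.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper class; assumes a HotSpot-based JVM (OpenJDK, Oracle).
public class EnableHeapDumpOnOom {

    private static HotSpotDiagnosticMXBean bean() {
        return ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
    }

    /** Turns on heap dumps on OutOfMemoryError for this running JVM. */
    public static void enable(String dumpPath) {
        // Both flags are "manageable" on HotSpot, so they can change without a restart;
        // other VMs may throw IllegalArgumentException here.
        bean().setVMOption("HeapDumpOnOutOfMemoryError", "true");
        bean().setVMOption("HeapDumpPath", dumpPath);
    }

    /** Returns the current value of a VM flag as a string. */
    public static String current(String flag) {
        return bean().getVMOption(flag).getValue();
    }

    public static void main(String[] args) {
        enable(args.length > 0 ? args[0] : "/dumpPath/"); // hypothetical default directory
        System.out.println("HeapDumpOnOutOfMemoryError=" + current("HeapDumpOnOutOfMemoryError"));
        System.out.println("HeapDumpPath=" + current("HeapDumpPath"));
    }
}
```

Setting the flags at run time only affects OutOfMemoryErrors thrown after the call, so the command-line form above remains the usual choice.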


Interactively Trigger a Heap Dump

To get a heap dump on demand, add the following parameter to the JVM and press Ctrl+Break at the desired moment:

-XX:+HeapDumpOnCtrlBreak

This option is only available from Java 1.4.2 up to Java 6.


HPROF agent

To use the HPROF agent to generate a dump at the end of execution, or on a SIGQUIT signal, use the following JVM parameter:

-agentlib:hprof=heap=dump,format=b

The HPROF agent was removed in Java 9, so this option is not available in Java 9 and later.


Alternatively, other tools can be used to acquire a heap dump:

- jmap -dump:format=b,file=<filename.hprof> <pid>

- jcmd <pid> GC.heap_dump <filename.hprof>

- JConsole (see sample usage in Basic Tutorial)

- JVisualVM: this was included with Java 7 and Java 8, but is now available from a separate download site.

- Memory Analyzer (see Acquire Heap Dump from Memory Analyzer below)
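The com.sun.management:type=HotSpotDiagnostic MXBean can also be called directly from Java code to dump the heap of the current process. A minimal sketch, assuming an OpenJDK or Oracle JVM; the class and file names are hypothetical. dumpHeap fails if the target file already exists, and some JDK versions also reject file names that do not end in .hprof.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper class; assumes a HotSpot-based JVM (OpenJDK, Oracle).
public class DumpHeapNow {

    /**
     * Writes an HPROF heap dump of this JVM to the given (not yet existing) file.
     * @param live if true, only objects reachable from GC roots are dumped
     */
    public static Path dump(Path file, boolean live) throws IOException {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        bean.dumpHeap(file.toAbsolutePath().toString(), live);
        return file;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("heapdump");
        Path out = dump(dir.resolve("example.hprof"), true); // hypothetical file name
        System.out.println("Wrote " + Files.size(out) + " bytes to " + out);
    }
}
```

The resulting file can be opened in Memory Analyzer like any other HPROF dump.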

System Dumps and Heap Dumps from IBM Virtual Machines

- All known formats

- HPROF binary heap dumps

- IBM 1.4.2 SDFF

- IBM Javadumps

- IBM SDK for Java (J9) system dumps

- IBM SDK for Java Portable Heap Dumps

| Dump Format | Approximate size on disk | Objects, classes, and classloaders | Thread details | Field names | Field and array references | Primitive field contents | Primitive array contents | Accurate garbage-collection roots | Native memory and threads | Compression |
|---|---|---|---|---|---|---|---|---|---|---|
| HPROF | Java heap size | Y | Y | Y | Y | Y | Y | Y | N | with Gzip to around 40 percent of original size |
| IBM system dumps | Java heap size + 30 percent | Y | Y | Y | Y | Y | Y | Y | Y | with Zip or jextract to around 20 percent of original size |
| IBM PHD | 20 percent of Java heap size | Y | with Javacore | N | Y | N | N | N | N | with Gzip to around 20 percent of original size |
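Compressing a finished dump needs nothing beyond java.util.zip. The sketch below gzips a dump file into a sibling file with a .gz suffix, streaming the data so that even a dump larger than the available memory can be compressed. The class name is hypothetical, and the percentages in the table are approximations; actual ratios depend on the heap contents.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.zip.GZIPOutputStream;

// Hypothetical helper class for gzip-compressing a dump file.
public class GzipDump {

    /** Compresses source into source + ".gz" and returns the compressed path. */
    public static Path gzip(Path source) throws IOException {
        Path target = source.resolveSibling(source.getFileName() + ".gz");
        try (InputStream in = Files.newInputStream(source);
             OutputStream out = new GZIPOutputStream(Files.newOutputStream(target), 64 * 1024)) {
            in.transferTo(out); // streams the dump; it is never loaded into memory at once
        }
        return target;
    }

    public static void main(String[] args) throws IOException {
        Path source = Path.of(args[0]);
        Path target = gzip(source);
        long before = Files.size(source);
        long after = Files.size(target);
        System.out.printf("%s: %d -> %d bytes (%.0f%% of original)%n",
                target, before, after, 100.0 * after / Math.max(1, before));
    }
}
```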

Older versions of IBM Java (e.g. < 5.0 SR12, < 6.0 SR9) require running jextract on the operating system core dump, which produces a zip file that contains the core dump, XML or SDFF file, and shared libraries. The IBM DTFJ feature still supports reading these jextracted zips, although IBM DTFJ feature version 1.12.29003.201808011034 and later cannot read IBM Java 1.4.2 SDFF files, so MAT cannot read them either. Dumps from newer versions of IBM Java do not require jextract for use in MAT, since DTFJ is able to directly read each supported operating system's core dump format. Simply ensure that the operating system core dump file ends with the .dmp suffix so that it is visible in the MAT Open Heap Dump selection.

It is also common to zip core dumps because they are so large and compress very well. If a core dump is compressed with .zip, the IBM DTFJ feature in MAT is able to decompress the ZIP file and read the core from inside (just like a jextracted zip).

The only significant downsides of system dumps compared with PHDs are that they are much larger, they usually take longer to produce, they may be useless if they are manually taken in the middle of an exclusive event that manipulates the underlying Java heap such as a garbage collection, and they sometimes require operating system configuration (for example on Linux and AIX) to ensure they are not truncated.

In recent versions of IBM Java (> 6.0.1), by default, when an OutOfMemoryError is thrown, IBM Java produces a system dump, PHD, javacore, and Snap file on the first occurrence for that process (although the core dump is often suppressed by the default core ulimit of 0 on operating systems such as Linux). For the next three occurrences, it produces only a PHD, javacore, and Snap file. If you only plan to use system dumps, and you have configured your operating system correctly as described above (particularly the core and file ulimits), then you may disable PHD generation with -Xdump:heap:none. For versions of IBM Java older than 6.0.1, you may switch from PHDs to system dumps using:

-Xdump:system:events=systhrow,filter=java/lang/OutOfMemoryError,request=exclusive+prepwalk -Xdump:heap:none

In addition to an OutOfMemoryError, system dumps may be produced using operating system tools (e.g. gcore in gdb for Linux, gencore for AIX, Task Manager for Windows, SVCDUMP for z/OS, etc.), using the IBM and OpenJ9 Java APIs, using the various options of -Xdump, using Java Surgery, and more.
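The IBM and OpenJ9 Java API includes the com.ibm.jvm.Dump class, whose static SystemDump(), HeapDump() and JavaDump() methods are the ones Memory Analyzer's IBM dump types call (see the Dump Type options below). The sketch calls them through reflection so that the same code still compiles and runs on a HotSpot JVM, where it simply reports that the API is absent. The wrapper class is hypothetical, and where the files are written is decided by the target JVM's -Xdump settings, not by this code.

```java
import java.lang.reflect.Method;

// Hypothetical wrapper; the com.ibm.jvm.Dump class only exists on IBM and OpenJ9 VMs.
public class IbmDumpTrigger {

    /**
     * Calls the static no-argument method com.ibm.jvm.Dump.<method>()
     * ("SystemDump", "HeapDump" or "JavaDump") if that class exists.
     * Returns false on VMs without the IBM/OpenJ9 API, e.g. HotSpot.
     */
    public static boolean trigger(String method) {
        try {
            Class<?> dump = Class.forName("com.ibm.jvm.Dump");
            Method m = dump.getMethod(method);
            m.invoke(null); // static method, so no receiver object
            return true;
        } catch (ClassNotFoundException e) {
            return false; // not an IBM or OpenJ9 VM
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("com.ibm.jvm.Dump." + method + " failed", e);
        }
    }

    public static void main(String[] args) {
        String method = args.length > 0 ? args[0] : "HeapDump";
        System.out.println(method + " triggered: " + trigger(method));
    }
}
```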

Versions of IBM Java older than IBM JDK 1.4.2 SR12, 5.0 SR8a and 6.0 SR2 are known to produce inaccurate GC root information.

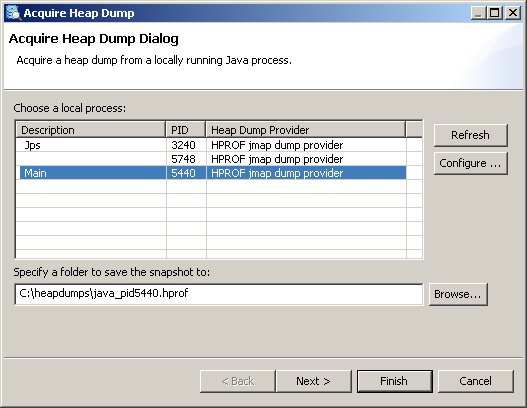

Acquire Heap Dump from Memory Analyzer

If the Java process from which the heap dump is to be acquired is on the same machine as the Memory Analyzer, it is possible to acquire a heap dump directly from the Memory Analyzer. Dumps acquired this way are directly parsed and opened in the tool.

Acquiring the heap dump is VM-specific. Memory Analyzer comes with several so-called heap dump providers: for OpenJDK, Oracle and Sun based VMs (needs an OpenJDK, Oracle or Sun JDK with jmap) and for IBM VMs (needs an IBM JDK or JRE). Extension points are also provided for adopters to plug in their own heap dump providers.

To trigger a heap dump from Memory Analyzer, open the File > Acquire Heap Dump... menu item.

Depending on the concrete execution environment, the pre-installed heap dump providers may work with their default settings, in which case a list of running Java processes should appear. To make selection easier, the Java processes can be sorted by clicking on the column titles for pid or Heap Dump Provider.

One can now select the process from which a heap dump should be acquired, provide a preferred location for the heap dump and press Finish to acquire the dump. Some of the heap dump providers may allow (or require) additional parameters (e.g. the type of the heap dump) to be set. This can be done by using the Next > button to get to the Heap Dump Provider Arguments page of the wizard.

Configuring the Heap Dump Providers

If the process list is empty, try to configure the available heap dump providers. To do this, press Configure..., select a matching provider from the list and click on it. You can then see the required settings and specify them. Next > will then apply any changed settings, and refresh the JVM list if any settings have been changed. < Back will return to the current JVM list without applying any changed settings. To apply the changed settings afterwards, re-enter the Configure Heap Dump Providers... page and leave it with Next >.

If a process is selected before pressing Configure... then the corresponding dump provider will be selected on entering the Configure Heap Dump Providers... page.

- HPROF jmap dump provider

- This provider uses the jps command supplied with an OpenJDK or Oracle based JDK to list the running JVMs on the system. The provider then uses the jmap command to get the chosen JVM to generate an HPROF dump. As an alternative, the provider can use the jcmd command both to list running JVMs and to generate dumps. This provider requires a JDK (Java Development Kit), not a JRE (Java Runtime Environment), for those commands. IBM JDKs do not have the jps or jmap commands. The commands on an OpenJ9 JDK may not work well enough for this provider to work with those JVMs.

- Attach API

- This uses the com.sun.tools.attach or com.ibm.tools.attach APIs to list the JVMs and then to attach to the chosen JVM to generate a dump. The Attach API is supplied as part of IBM JDKs and JREs, but for Oracle and OpenJDK it is only supplied as part of JDKs (in tools.jar for Java 8 and earlier) and not as part of JREs. Therefore, if Memory Analyzer is run with an OpenJDK or Oracle JRE, this dump provider will not be available. The com.sun.tools.attach API is available on Oracle and OpenJDK JDKs, on OpenJ9 JDKs and JREs, and on IBM JDKs and JREs from Java 8 SR5. The com.ibm.tools.attach API is available on earlier IBM JDKs and JREs. The Attach API dump provider automatically uses whichever Attach API is available, and loads tools.jar if required. By default, the Attach API in Java 9 or later does not permit a Java process to connect to itself, so Memory Analyzer cannot generate a dump of itself using the Attach API dump provider. Use another dump provider instead.

- Attach API with helper JVM

- If Memory Analyzer is run with an OpenJDK or Oracle JRE, then this dump provider can be used with an OpenJDK or Oracle JDK by providing a path to a java executable. The dump provider will load the tools.jar if required, so that the com.sun.tools.attach API is accessible. This provider can also be used to list JVMs of a different type from the JVM used to run Memory Analyzer. For example, dumps from IBM JVMs can be generated by specifying a path to an IBM JVM java command even when Memory Analyzer is run with an OpenJDK or Oracle JVM. If a path to a jcmd executable is provided, then this command will be used to generate a list of running JVMs and to generate the dumps.
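For comparison, the commands a jcmd-based provider relies on can be reproduced outside Memory Analyzer: jcmd -l lists the running JVMs, and jcmd <pid> GC.heap_dump <file> writes an HPROF dump. The sketch below only builds and runs those command lines; it is not Memory Analyzer's implementation, the class name is hypothetical, and it assumes a JDK 9 or later layout where jcmd sits in java.home/bin (in a Java 8 JDK, java.home points at the jre subdirectory).

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that drives the JDK's jcmd tool.
public class JcmdDump {

    /** Guesses the jcmd executable of the running JDK (assumption: JDK 9+, not a JRE). */
    public static Path defaultJcmd() {
        String exe = File.separatorChar == '\\' ? "jcmd.exe" : "jcmd";
        return Path.of(System.getProperty("java.home"), "bin", exe);
    }

    /** Command that lists running JVMs: jcmd -l */
    public static List<String> listCommand(Path jcmd) {
        return List.of(jcmd.toString(), "-l");
    }

    /** Command that writes an HPROF dump; gz adds -gz=1 (OpenJDK 15 and later). */
    public static List<String> dumpCommand(Path jcmd, long pid, Path file, boolean gz) {
        List<String> cmd = new ArrayList<>(List.of(jcmd.toString(), Long.toString(pid), "GC.heap_dump"));
        if (gz) {
            cmd.add("-gz=1");
        }
        cmd.add(file.toAbsolutePath().toString());
        return cmd;
    }

    /** Runs a command with its output shown on this console; returns the exit code. */
    public static int run(List<String> cmd) throws IOException, InterruptedException {
        return new ProcessBuilder(cmd).inheritIO().start().waitFor();
    }

    public static void main(String[] args) throws Exception {
        Path jcmd = defaultJcmd();
        run(listCommand(jcmd));
        if (args.length == 2) { // usage: <pid> <file.hprof>
            run(dumpCommand(jcmd, Long.parseLong(args[0]), Path.of(args[1]), false));
        }
    }
}
```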

Options

- Dump Type

- System

- These process core dumps are generated using the com.ibm.jvm.Dump.SystemDump() method called by an agent library generated and loaded into the target JVM by Memory Analyzer. These dumps can be generated by IBM and OpenJ9 JVMs.

- Heap

- These Portable Heap Dumps are generated using the com.ibm.jvm.Dump.HeapDump() method called by an agent library generated and loaded into the target JVM by Memory Analyzer. These dumps can be generated by IBM and OpenJ9 JVMs.

- Java

- These Javacore dumps are generated using the com.ibm.jvm.Dump.JavaDump() method called by an agent library generated and loaded into the target JVM by Memory Analyzer. These dumps can be generated by IBM and OpenJ9 JVMs.

- HPROF

- These HPROF dumps are generated using the com.sun.management:type=HotSpotDiagnostic MXBean called in an agent library generated and loaded into the target JVM by Memory Analyzer. These dumps can be generated by Oracle and OpenJDK JVMs.

- Other options

- compress

- System dumps can be processed using jextract, which compresses the dump and also adds extra system information so that the dump can be moved to another machine. Portable Heap Dump (PHD) files generated with the Heap option can be compressed using the gzip compressor to reduce the file size. HPROF files can also be compressed using the gzip compressor to reduce the file size. A compressed file may take longer to parse in Memory Analyzer, and running queries and reports and reading fields from objects may also take longer.

- chunked

- OpenJDK 15 and later can generate compressed HPROF files by using the -gz=1 option, as in jcmd <pid> GC.heap_dump -gz=1 <filename.hprof>, which compresses the HPROF file using gzip, but in chunks so that random access to the file is quicker. Memory Analyzer version 1.12 and later can read this format. With both this option and compress selected, the HPROF dump provider generates HPROF files compressed in chunks, for faster read access than normal gzip-compressed files.

- live

- On IBM JVMs this forces a garbage collection before a dump is taken. This could affect the performance of the program running on the target JVM, but may reduce the size of PHD heap dumps. On Oracle and OpenJDK JVMs this dumps only live objects into the HPROF file and should reduce its size. By default, Memory Analyzer removes the non-live objects from the dump as it indexes the dump and builds the snapshot, and puts information about those objects in the unreachable objects histogram. If this histogram is used or the 'keep unreachable objects' option is set, then the live option should not be set, as it will remove required information.

- listattach

- This is used when listing JVMs using the Attach API. The dump provider then attaches to each running JVM in the list and extracts more information to give a better description. Because this takes a little longer, cancelling the list operation disables this option so that the next listing will be faster. The option can be re-enabled if required.

- dumpdir

- IBM JVMs produce dumps in a directory specified by the target JVM, not by Memory Analyzer. Memory Analyzer needs to find the dump it caused to be generated, and looks for the dump files in the user.dir directory of the target JVM. If this is not the right place, then Memory Analyzer will not find the generated dump, and the user will have to find it manually afterwards. If the user knows where the target JVM will produce dumps, then that directory can be entered using this option.

- dumptemplate

- This gives the file name pattern to be used for the dump. The date and time are inserted into the name using substitution variable 0, for example {0,date,yyyyMMdd.HHmmss}. The process identifier is inserted using substitution variable 1, for example {1,number,0}. The instance number, used to make the dump file name unique, is inserted using substitution variable 2, for example {2,number,0000}. The 0000 is a standard MessageFormat number pattern giving the minimum number of digits to use.
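The dumptemplate placeholders are ordinary java.text.MessageFormat patterns, so a template can be tried out in isolation. A minimal sketch; the class name and the template in main are hypothetical examples, not Memory Analyzer's default. Locale.ROOT keeps the digits independent of the user's locale.

```java
import java.text.MessageFormat;
import java.util.Date;
import java.util.Locale;

// Hypothetical helper for previewing a dump file name template.
public class DumpTemplate {

    /** Expands a template: {0} is the date, {1} the process id, {2} the instance number. */
    public static String expand(String template, Date when, long pid, int instance) {
        // Locale.ROOT gives plain ASCII digits regardless of the default locale
        return new MessageFormat(template, Locale.ROOT).format(new Object[] {when, pid, instance});
    }

    public static void main(String[] args) {
        String template = "java_{0,date,yyyyMMdd.HHmmss}_pid{1,number,0}_{2,number,0000}.hprof";
        System.out.println(expand(template, new Date(), ProcessHandle.current().pid(), 1));
    }
}
```

For instance, expanding java_pid{1,number,0}.{2,number,0000}.hprof with process id 4242 and instance number 7 gives java_pid4242.0007.hprof. The date part uses the JVM's default time zone.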

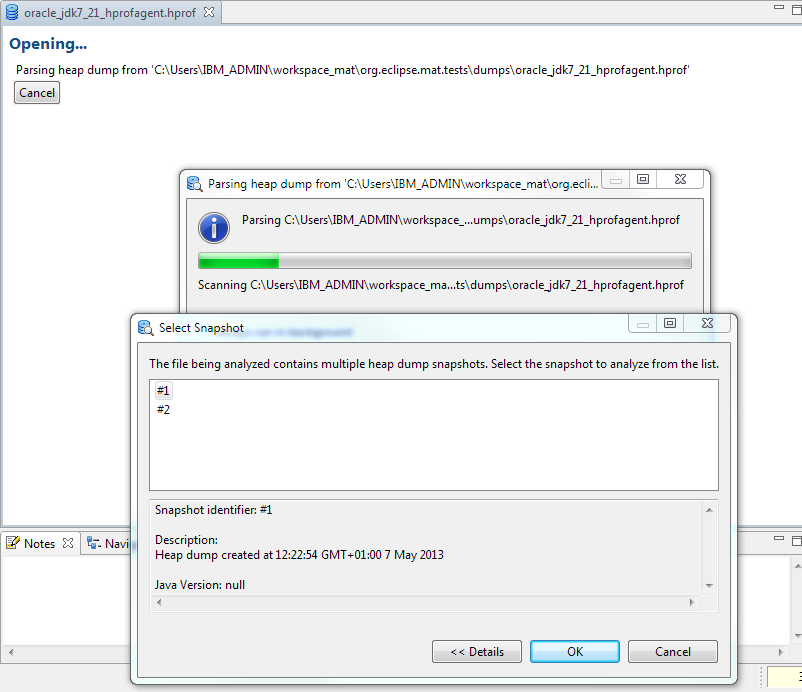

Multiple snapshots in one heap dump

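A heap dump file can occasionally contain more than one heap dump snapshot. For example, the HPROF agent started with -agentlib:hprof=heap=dump,format=b writes every dump it is triggered to take into the same file. Memory Analyzer 1.2 and earlier handled this situation by choosing the first heap dump snapshot found unless another was selected via an environment variable or MAT DTFJ configuration option.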

Memory Analyzer 1.3 handles this situation by detecting the multiple dumps, then presenting a dialog for the user to select the required snapshot.

The index files generated have a component in the file name from the snapshot identifier, so the index files from each snapshot can be distinguished. This means that multiple snapshots from one heap dump file can be examined in Memory Analyzer simultaneously. The heap dump history for the file remembers the last snapshot selected for that file, though when the snapshot is reopened via the history, its index file is also shown in the history. To open another snapshot in the dump, close the first snapshot, then reopen the heap dump file using the File menu, and another snapshot can be chosen to be parsed. The first snapshot can then be reopened using the index file in the history, and both snapshots can be viewed at once.
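To check in advance whether an HPROF file holds more than one snapshot, its top-level records can be scanned: each HEAP DUMP record (tag 0x0C) is one complete dump, and a segmented dump is a run of HEAP DUMP SEGMENT records (0x1C) closed by a HEAP DUMP END record (0x2C). The sketch assumes an uncompressed file (wrap the stream in a GZIPInputStream for a gzip-compressed dump) and counts only completed snapshots. It is an illustration of the file format, not how Memory Analyzer itself detects snapshots, and the class name is hypothetical.

```java
import java.io.BufferedInputStream;
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper that counts heap dump snapshots in an HPROF file.
public class HprofSnapshotCounter {

    private static final int HEAP_DUMP = 0x0C;     // a complete heap dump in one record
    private static final int HEAP_DUMP_END = 0x2C; // closes a run of HEAP_DUMP_SEGMENT (0x1C) records

    /** Counts heap dump snapshots in an uncompressed HPROF stream. */
    public static int countSnapshots(InputStream raw) throws IOException {
        DataInputStream in = new DataInputStream(new BufferedInputStream(raw));
        // Header: "JAVA PROFILE 1.0.x" terminated by NUL, u4 identifier size, u8 timestamp
        StringBuilder version = new StringBuilder();
        int b;
        while ((b = in.read()) != 0) {
            if (b < 0) throw new EOFException("truncated HPROF header");
            version.append((char) b);
        }
        if (!version.toString().startsWith("JAVA PROFILE")) {
            throw new IOException("not an HPROF file: " + version);
        }
        in.readInt();  // identifier size
        in.readLong(); // timestamp in milliseconds
        int snapshots = 0;
        int tag;
        while ((tag = in.read()) >= 0) {
            in.readInt();                                       // microseconds since the header timestamp
            long length = Integer.toUnsignedLong(in.readInt()); // body length is an unsigned u4
            if (tag == HEAP_DUMP || tag == HEAP_DUMP_END) {
                snapshots++;
            }
            skipFully(in, length);
        }
        return snapshots;
    }

    private static void skipFully(InputStream in, long n) throws IOException {
        while (n > 0) {
            long skipped = in.skip(n);
            if (skipped > 0) {
                n -= skipped;
            } else if (in.read() >= 0) {
                n--; // skip made no progress; consume one byte instead
            } else {
                throw new EOFException("truncated HPROF record");
            }
        }
    }

    public static void main(String[] args) throws IOException {
        try (InputStream in = Files.newInputStream(Path.of(args[0]))) {
            System.out.println(countSnapshots(in) + " snapshot(s)");
        }
    }
}
```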

Summary

The following table shows the availability of VM options and tools on the various platforms:

| Vendor | Release | VM parameter: On out of memory | VM parameter: On Ctrl+Break | VM parameter: Agent | JVM tools: JMap | JVM tools: JCmd | JVM tools: JConsole | SAP tool: JVMMon | Attach API | MAT: acquire heap dump |
|---|---|---|---|---|---|---|---|---|---|---|
| Sun, HP | 1.4.2_12 | Yes | Yes | Yes | No | No | No | No | No | |
| | 1.5.0_07 | Yes | Yes (Since 1.5.0_15) | Yes | Yes (Only Solaris and Linux) | No | No | No | com.sun.tools.attach | Yes (Only Solaris and Linux) |
| | 1.6.0_00 | Yes | No | Yes | Yes | No | Yes | No | com.sun.tools.attach | Yes |
| Oracle, OpenJDK, HP | 1.7.0 | Yes | No | Yes | Yes | Yes | Yes | | com.sun.tools.attach | Yes |
| Oracle, OpenJDK, Eclipse Temurin, HP, Amazon Corretto | 1.8.0 | Yes | No | Yes | Yes | Yes | Yes | | com.sun.tools.attach | Yes |
| | 11 | Yes | No | No | Yes | Yes | Yes | | com.sun.tools.attach | Yes |
| | 17 | Yes | No | No | Yes | Yes | Yes | | com.sun.tools.attach | Yes |
| | 21 | Yes | No | No | Yes | Yes | Yes | | com.sun.tools.attach | Yes |
| SAP | Any 1.5.0 | Yes | Yes | Yes | Yes (Only Solaris and Linux) | No | No | Yes | | |
| IBM | 1.4.2 SR12 | Yes | Yes | No | No | No | No | No | No | |
| | 1.5.0 SR8a | Yes | Yes | No | No | No | No | No | com.ibm.tools.attach | No |
| | 1.6.0 SR2 | Yes | Yes | No | No | No | No | No | com.ibm.tools.attach | No |
| | 1.6.0 SR6 | Yes | Yes | No | No | No | No | No | com.ibm.tools.attach | Yes |
| | 1.7.0 | Yes | Yes | No | No | No | No | No | com.ibm.tools.attach | Yes |
| | 1.8.0 | Yes | Yes | No | No | No | No | No | com.ibm.tools.attach | Yes |
| | 1.8.0 SR5 | Yes | Yes | No | No | No | Yes (PHD only?) | No | com.sun.tools.attach | Yes |
| OpenJ9, IBM Semeru | 1.8.0 | Yes | Yes | No | No | Yes | Yes (PHD only) | No | com.sun.tools.attach | Yes |
| | 11 | Yes | Yes | No | No | Yes | Yes | No | com.sun.tools.attach | Yes |
| | 17 | Yes | Yes | No | No | Yes | Yes | No | com.sun.tools.attach | Yes |
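Which entry of the Attach API column applies to a given runtime can be probed at run time. The sketch below looks for com.sun.tools.attach first and then com.ibm.tools.attach, and lists the visible JVMs through VirtualMachine.list(); reflection keeps it compilable without tools.jar or the jdk.attach module. The class name is hypothetical, and the code assumes the older com.ibm.tools.attach package uses the same VirtualMachine and VirtualMachineDescriptor names and methods as com.sun.tools.attach.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

// Hypothetical probe for the Attach API available to this JVM.
public class AttachApiProbe {

    /** Returns the Attach API package visible to this JVM, or null if there is none. */
    public static String availableAttachApi() {
        for (String pkg : new String[] {"com.sun.tools.attach", "com.ibm.tools.attach"}) {
            try {
                Class.forName(pkg + ".VirtualMachine");
                return pkg;
            } catch (ClassNotFoundException e) {
                // not present, try the next candidate
            }
        }
        return null;
    }

    /** Lists "id displayName" strings from VirtualMachine.list() of the given package. */
    public static List<String> listJvms(String pkg) throws ReflectiveOperationException {
        Class<?> vm = Class.forName(pkg + ".VirtualMachine");
        Class<?> descriptor = Class.forName(pkg + ".VirtualMachineDescriptor");
        // Assumption for com.ibm.tools.attach: same method names as com.sun.tools.attach
        Method id = descriptor.getMethod("id");
        Method name = descriptor.getMethod("displayName");
        List<String> result = new ArrayList<>();
        for (Object d : (List<?>) vm.getMethod("list").invoke(null)) {
            result.add(id.invoke(d) + " " + name.invoke(d));
        }
        return result;
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        String pkg = availableAttachApi();
        if (pkg == null) {
            System.out.println("No Attach API found (a JRE, or a Java 8 JDK without tools.jar on the class path)");
            return;
        }
        System.out.println("Using " + pkg);
        listJvms(pkg).forEach(System.out::println);
    }
}
```

Listing JVMs does not attach to them, so this also works for the current process on Java 9 and later.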